🛣️ Our roadmap to Personalized AI and AGI

We will not train a trillion-parameter model: fewer than 100B active parameters is all you need.

If you want to find out more about the latest QRWKV1 model, which makes all of this possible, we recommend reading this first:

In the past quarter, we have seen the following breakthroughs in open source:

GPT-4o-mini-class open models (DeepSeek, Qwen-32B) on our platform

72B Attention-Free QRWKV model built on 8 GPUs

Both with more knowledge and capability than an average human

A tipping point with RWKV v6 and v7 improvements

As such, my prediction (Eugene Cheah) for 2025 is that we are at the inflection point, where we scale towards model reliability with better memories, instead of scaling to a trillion parameters.

Our vision for AGI in summary

The video above is the condensed version of our vision for personalized AI & AGI.

The writing below is the longer form, with some additional details.

The scaling wall for bigger models

The problem with scaling today lies in its fundamental promise: a step improvement in capability for every 10x increase in parameter size.

While it has remained somewhat true that each 10x brings a step-up in capability, the problem is the diminishing returns relative to both training and inference costs.

We are now in the range of billions of dollars of investment for the current GPT-4.5 model, with no clear answer as to whether OpenAI's “Super AGI” goal is achievable with another 10x, 100x, or 1000x. Beyond that, we are starting to talk in trillions of dollars, just for training, with even more required to actually run the model.

While this path might still make sense for “Super AGI”, it makes no sense for “Human-level AGI”, because ...

Short Context AGI - is already here (sort of)

For the vast majority of users on applications like Character.AI, within a context window of approximately 8,000 tokens, these AI characters are AGI: perhaps with some flaws, but a unique character experience regardless. It is what drives the 28 million-plus users to keep engaging with the platform.

The limits, however, are clear:

They are unable to reliably follow instructions within memory

Nor are they able to gracefully handle memories beyond their context length

The lack of reliable AI memory

What holds back AI (and AI agents) is …

The lack of reliable understanding, in memories

Herein lies an irony: these models are no doubt knowledgeable and capable.

Today’s best models, both open source (e.g. DeepSeek R1) and closed source, are no doubt capable of doing PhD-level math and physics with some degree of reliability (let's say 1 out of 30 times).

Yet they lack the reliability to do basic college-level tasks 30 out of 30 times, be it as a cashier or as any simple agent that needs to carry out a long multi-step process.

This is also known as the “Compounding AI Agent Error” problem.
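The arithmetic behind this compounding effect can be sketched as follows. This is a minimal illustration, assuming every step of a task succeeds independently and with the same probability; the function names and the example reliabilities are hypothetical and are not measurements from our platform.

```python
def chain_success_rate(step_reliability: float, num_steps: int) -> float:
    """Chance that every step succeeds, assuming independent, equally reliable steps."""
    if not 0.0 <= step_reliability <= 1.0:
        raise ValueError("step_reliability must be between 0 and 1")
    if num_steps < 0:
        raise ValueError("num_steps must be non-negative")
    return step_reliability ** num_steps


def required_step_reliability(target_success_rate: float, num_steps: int) -> float:
    """Per-step reliability needed for the whole chain to reach a target success rate."""
    if not 0.0 < target_success_rate <= 1.0:
        raise ValueError("target_success_rate must be in (0, 1]")
    if num_steps <= 0:
        raise ValueError("num_steps must be positive")
    return target_success_rate ** (1.0 / num_steps)


if __name__ == "__main__":
    # Hypothetical per-step reliabilities for a 30-step agent workflow.
    for reliability in (0.90, 0.95, 0.99):
        rate = chain_success_rate(reliability, num_steps=30)
        print(f"{reliability:.0%} per step over 30 steps -> {rate:.1%} end to end")
```

Under these assumptions, a step that is right 95% of the time completes a 30-step process only about one time in five, which is why per-step reliability matters more than peak capability for agents.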

And fixing the reliability problem does not need a bigger model. We already have proof of this as observed across our platform…

How is reliability being solved in production today?

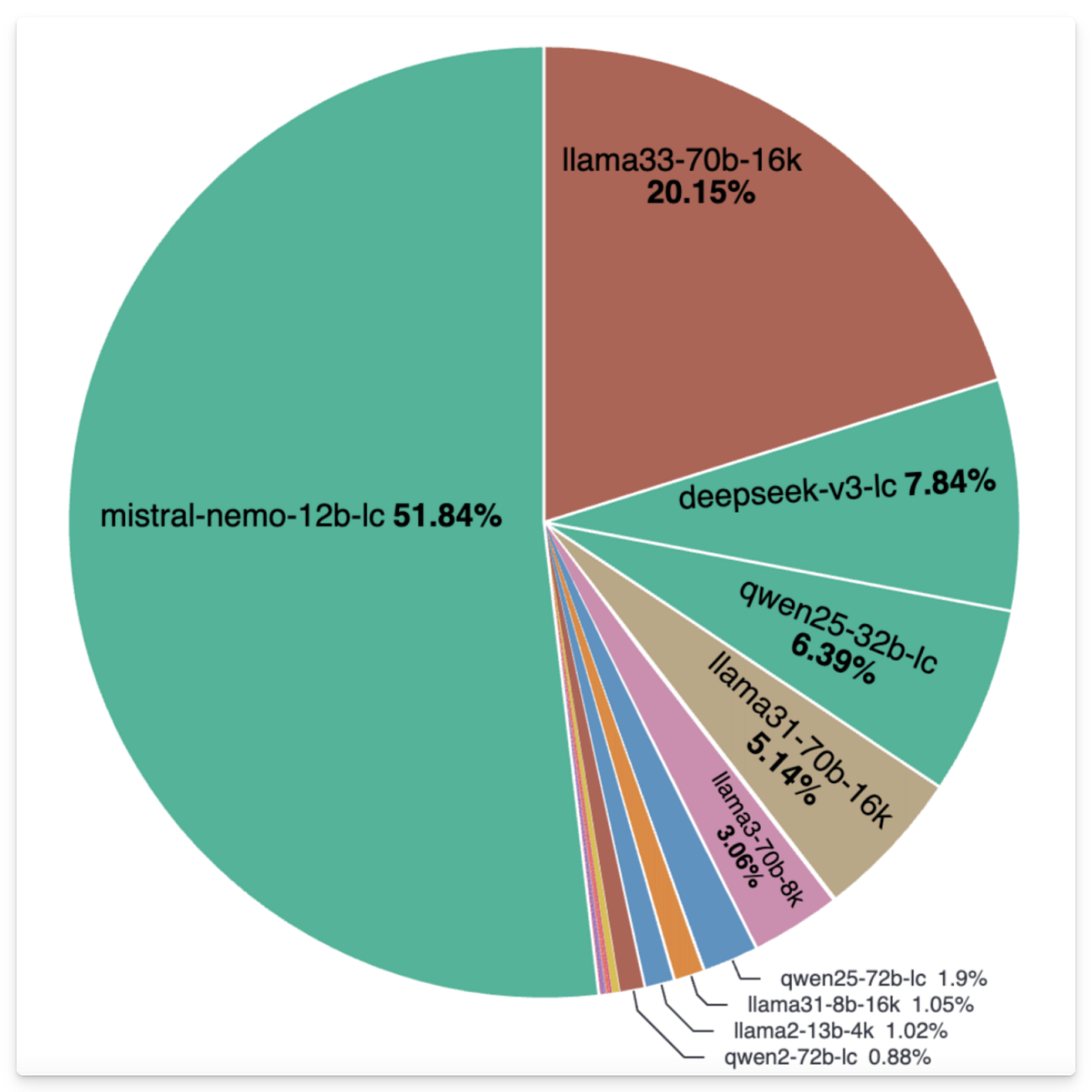

One of the interesting patterns we have observed on our platform is the workload difference between individual and scaled-up commercial use cases at Featherless.

For example, the chart above shows how our individual users are running thousands of fine-tuned models, with a bias towards top models like DeepSeek R1 or LLaMA3 70B, as expected.

But this changes dramatically when we look at the models run by commercial users in production at scale.

The graph is not a mistake: the vast majority of the production workload we see at scale (by request or token count) is the one-year-old Mistral Nemo 12B (including both base and finetuned variants).

Not the latest model

Not the biggest model

But one that is good enough for predictable prompt engineering or finetuning

This is consistent with AI systems and agents in production at scale, where either or both of the following solutions are used.

AI Engineering: large problems are scoped, and broken into smaller tasks, solvable via prompt engineering

Finetuning: specialized domain-specific model is finetuned for specific tasks

In both cases, significant engineering effort is required:

to design the AI agent and its workflow, with prompt engineering

to calibrate the dataset for finetuning, which requires dedicated specialized talent due to the limitations of catastrophic forgetting

The latter is typically reserved for larger teams, because of the difficulty in scaling the resources required to “get it right”, given the highly trial-and-error nature of the process involved in both tasks.

Catastrophic forgetting: the challenge, faced by all existing models, where existing knowledge and capabilities are lost while new knowledge is being added.

For reliability at scale, it’s less about model size …

And more about designing the task and breaking it into reliable parts.

Prompt Engineering for initial results, and PMF (Product Market Fit),

Finetuning for longer-term reliability.

How the RWKV memory module can solve this

If all AI models are already “capable” enough to potentially handle the vast majority of commercial tasks, especially when finetuned for high reliability, then a recurrent model, with memories as part of its core design, dramatically simplifies the process of “finetuning”, particularly around catastrophic forgetting.

For example, it is possible to “memory tune” the memory module state (Time Mix, in the diagram), which by default is left blank in our base models.

This will not induce any catastrophic forgetting, as none of the initial model weights are modified. It is a process we have been testing and implementing for a few pilot customers.
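The idea of tuning only the state while leaving the weights untouched can be illustrated with a toy example. This is a sketch under heavy assumptions, not the RWKV Time Mix implementation or our production tuning process: `FrozenRecurrentModel`, `memory_tune`, and all the numbers below are hypothetical, and the "model" is a tiny linear recurrence chosen so the gradient can be written by hand.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple


@dataclass(frozen=True)
class FrozenRecurrentModel:
    """Toy stand-in for a pretrained recurrent model; its weights never change."""
    decay: Tuple[float, ...]  # how much of each state channel is kept per step
    w_in: Tuple[float, ...]   # how the input is written into each channel
    w_out: Tuple[float, ...]  # how the final state is read out

    def run(self, initial_state: Sequence[float], inputs: Sequence[float]) -> float:
        state = list(initial_state)
        for x in inputs:
            state = [d * s + w * x for d, s, w in zip(self.decay, state, self.w_in)]
        return sum(w * s for w, s in zip(self.w_out, state))


def memory_tune(model: FrozenRecurrentModel, inputs: Sequence[float], target: float,
                steps: int = 200, learning_rate: float = 0.5) -> List[float]:
    """Gradient descent on the initial state only, starting from a blank state."""
    state = [0.0] * len(model.decay)
    # For this linear toy model: d(output)/d(state[i]) = w_out[i] * decay[i] ** len(inputs)
    sensitivity = [w * d ** len(inputs) for w, d in zip(model.w_out, model.decay)]
    for _ in range(steps):
        error = model.run(state, inputs) - target  # gradient of 0.5 * error ** 2
        state = [s - learning_rate * error * g for s, g in zip(state, sensitivity)]
    return state


if __name__ == "__main__":
    model = FrozenRecurrentModel(decay=(0.9, 0.5), w_in=(1.0, 0.5), w_out=(1.0, -1.0))
    prompt = [1.0, 0.0]
    tuned_state = memory_tune(model, prompt, target=2.0)
    print("blank state output:", model.run([0.0, 0.0], prompt))
    print("tuned state output:", model.run(tuned_state, prompt))
```

Because the dataclass is frozen and only `state` is updated, running the same model from a blank state behaves exactly as before; the tuned behaviour lives entirely in the state that is handed to it.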

Recurrent models are also, by design, trained to handle memories in a way much closer to how we humans do, which makes them naturally more scalable for solving the reliable-memory problem that transformers face.

It also brings about multiple additional benefits, such as 100x lower inference cost (due to its linear scaling nature).
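Why linear scaling matters can be sketched with a toy cost model. It assumes a transformer with a KV cache, where generating token t looks back at t tokens, and a recurrent model that does one fixed-size state update per token. The units are arbitrary and constant factors such as model width are ignored, so this does not reproduce the 100x figure; it only shows why the gap widens as context grows. All function names are hypothetical.

```python
def attention_generation_cost(num_tokens: int) -> int:
    """Toy cost units for attention: token t looks back at t tokens."""
    return sum(range(1, num_tokens + 1))  # 1 + 2 + ... + n, grows quadratically


def recurrent_generation_cost(num_tokens: int, state_update_cost: int = 1) -> int:
    """Toy cost units for a recurrent model: one fixed-size state update per token."""
    return num_tokens * state_update_cost  # grows linearly


def cost_ratio(num_tokens: int) -> float:
    """How many times more toy cost units attention spends than the recurrent model."""
    return attention_generation_cost(num_tokens) / recurrent_generation_cost(num_tokens)


if __name__ == "__main__":
    for n in (1_000, 8_000, 64_000):
        print(f"{n:>6} tokens: attention/recurrent cost ratio = {cost_ratio(n):,.1f}")
```

In this toy model the ratio is (n + 1) / 2, so it keeps growing with context length instead of settling at a fixed multiple.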

But more importantly, it’s not about what it can do today, but about what we can build tomorrow by iterating on this technology path.

We are by no means perfect today (or ever), which is why we iterate more than anyone else

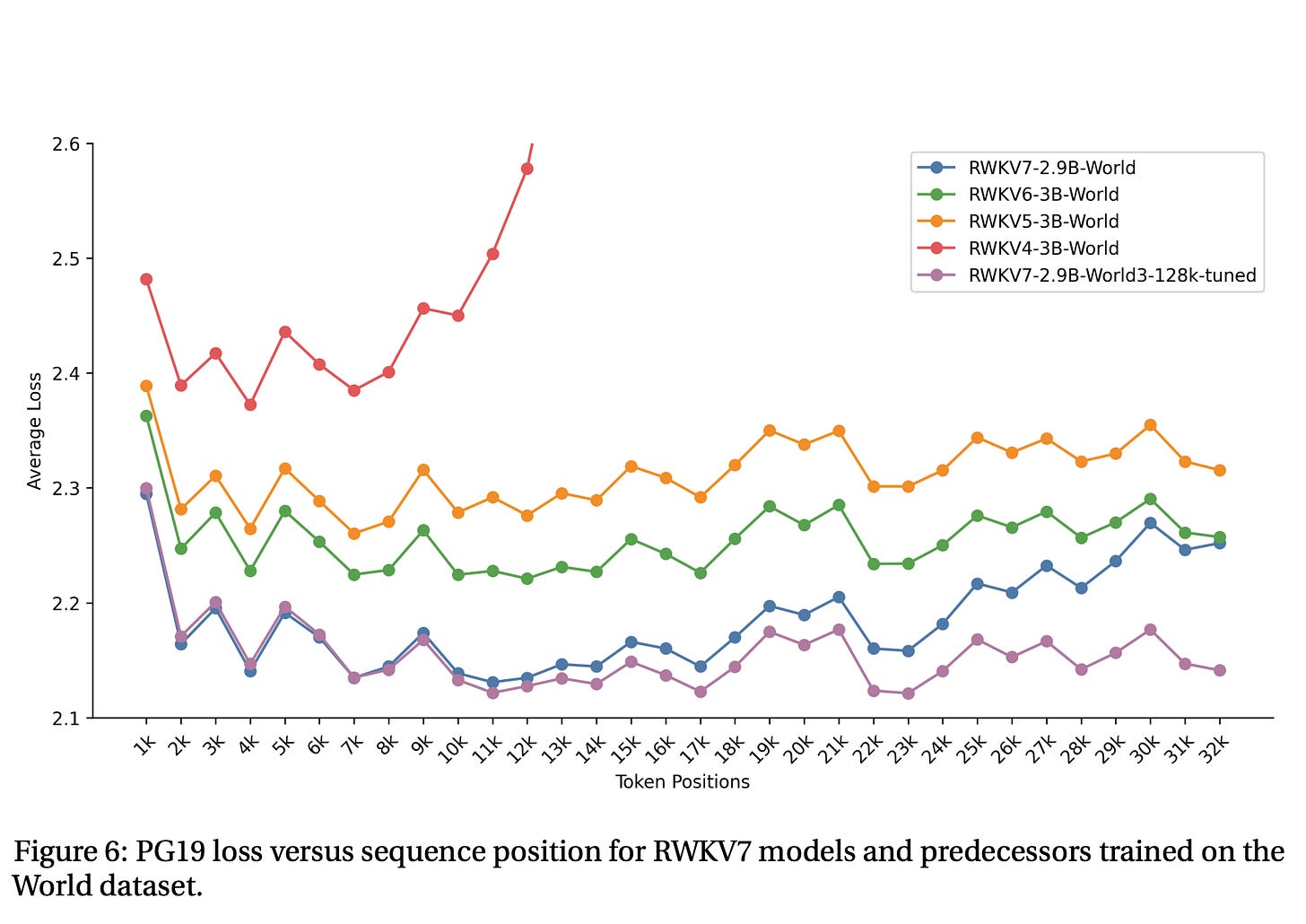

The RWKV team is arguably the only team today that has been consistently making step-function improvements in our architecture every half a year.

This can be best seen in our model performance chart across the past 2 years, from version 4 to version 7.

It is a process that we intend to accelerate moving forward.

How to achieve Personalized AI

With the appropriate resource support, we expect to iterate into a reliable version of personalized AI within the next year or two.

We define this as being able to “memory tune” a model for production use cases without catastrophic forgetting, with high accuracy in narrow tasks, and with 100 million tokens or less.

Quickly, and without the need for highly specialized professionals.

This is not considered “AGI”, because it will be constrained to what it was “designed to be trained on”: it would not be continuously learning new knowledge.

However, this is not an issue for most commercial use cases, as it would become the workhorse that drives the AI agent adoption cycle.

And make it → Personalized AGI

Once personalized AI is mastered, we can focus on the next step: automating the process by which the model collects and reflects on its “day-to-day” interactions, for further memory tuning “in the night”.

For the most part, this would be done incrementally, instead of as a giant leap, because it is an extension of the personalized AI memory-tuning process.

The main differentiator, however, is that we would now allow the model to decide which new knowledge and information from its day-to-day experiences to “learn from”.

However, we do not expect this automated tuning process to be infinitely stable, due to potential memory loss past a certain scale, be it a billion or a trillion tokens.

What this simply translates to is a lifespan for a continuous personal AGI agent, one that can span days, then weeks, months, and eventually years with each iterative improvement.

Why all of this is inevitable

It is a binary outcome: can recurrent models be iterated on and scaled (not just by parameter size) to be more reliable and have better memories?

We have provided evidence that the above statement is true, on a progressive trend for 2 years, despite the limited resources invested in the team.

We have provided evidence that recurrent models can scale up to match the capability of some of the largest open transformer models.

Assuming the above is true:

The high-level roadmap is here, in public.

Better and more capable open transformer models will arrive, which will speed up the process for us to iterate on (little to no training from scratch required).

We have enough resources to keep scaling our inference platform, while slowly iterating on this roadmap with a handful of researchers.

Even if we at Featherless were to suddenly disappear, there are enough resources and momentum within the open model space to let future teams slowly, eventually, follow the same roadmap laid out here.

Overall, if Featherless AI is properly supported in this journey to seize the opportunity window, we expect the following timeline:

< 2 Years for personalized AI

< 4 Years for personalized AGI

Left on its own, with Moore’s-law levels of improvement in computing, we expect the hardware required to iterate on this roadmap to enter the “personal computing” space in 4 years. Once that is reached, we expect a rapid innovation cycle that leads to the same result, be it within or outside the USA.

So my question is: do you want to support us in making this happen in 2-4 years, and seize the opportunity with us?

Or would you like to miss out on it when it inevitably comes?

This model was originally published as Qwerky-72B. However, due to confusion with another similarly named company/model, we have been asked to avoid using the Qwerky name, so we have renamed our model to QRWKV-72B.