Featherless Feud: a dip into LLM-powered game development

rebuilding a TV classic

It takes time to develop a sense of how to apply LLMs to concrete problems. But a great way to do this is to build with them; in this post, we’ll work through building a web version of the TV classic Family Feud.

This is a game with elements that are quite challenging for “traditional” software development but remarkably easy with an LLM. If you want to try what we built before reading further, you can do so here!

The Challenges

Building a game requires solving lots of problems around input, output, UI, visuals, latency; the list goes on. We’ll be focussing on the content-related challenges that don’t suit the “rules-based” nature of typical software.

When we think about building Family Feud - or Featherless Feud as we call this version - we hit two problems:

question and answer generation

fuzzy answer matching

i.e. how do we come up with quiz questions, and when given answers that aren’t exactly on the list, how do we match them?

For the actual show, the question bank is built with the help of surveys of the general public, while identifying variations of official answers is done live by show staff: a natural language task that is simple for humans but challenging for software.

In both cases, we’ll be prompting an LLM to generate a certain kind of text and interpreting the results, but beyond just prompting, how we run the inference also matters. Let’s dive in.

Part 1 - Question/Answers Generation

To generate questions, we might prompt an LLM like so

You are the producer of the game show Family Feud.

Your job is to devise a question and a list of common answers to this question. For each answer, output a number between 0 and 100 which is how common you think the answer would be when given by a member of the general public. The sum of the scores should not exceed 100.

Now, that content has structure (e.g. question versus answers) that we need to know about so that the various pieces can be handled appropriately by the different parts of our app.

The LLM “knows” about the relationship between the questions and answers in an output, so we can ask it to include the annotations in the output. We really could ask the LLM to output any kind of structured format but the most common format to request output in is JSON.

As for getting a model to formulate its output as JSON, there are typically two parts:

prompting for the answer to be in JSON with a description of desired structure

requesting JSON from your inference API call

For 1., you might update your prompt as follows

You are the producer of the game show Family Feud.

Your job is to devise a question and a list of common answers to this question. For each answer, output a number between 0 and 100 which is how common you think the answer would be when given by a member of the general public. The sum of the scores should not exceed 100.

Be very concise, present only the question and answers, and don't add anything else.

Output valid JSON with the following format: two keys, `question`, which should be a string, and `answers` should be an array of objects. Each object in the `answers` array should have two keys, `answer` and `score`, each of which should be a string.

e.g.

```json

{

"question": "Name something that might be wobbly",

"answers": [

{ "answer": "Furniture" , score: 64 },

{ "answer": "Person/A Drunk", score: 15 },

{ "answer": "Spinning toy/top", score: 5 },

{ "answer": "Shopping Cart", score: 3 }

]

}

```

The sum of the scores should not exceed 100.

Being prescriptive in structure is important; if the output description isn’t clear enough to the model, you’ll get variation in the structure and keys used, which will likely cause an exception when the part of your software operating on the JSON output tries to separate the question from the answers.
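One way to fail early and clearly on that kind of variation is to check the shape of the output before the rest of the app touches it. The following is a minimal sketch, not the code used in the game; the function name `parse_round` and the exact checks are assumptions made for illustration.

```python
import json


def parse_round(text):
    """Parse one generated round and check it has the shape the game expects."""
    data = json.loads(text)  # raises json.JSONDecodeError on invalid JSON
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")

    question = data.get("question")
    answers = data.get("answers")
    if not isinstance(question, str):
        raise ValueError("expected 'question' to be a string")
    if not isinstance(answers, list) or not answers:
        raise ValueError("expected 'answers' to be a non-empty list")

    cleaned = []
    for item in answers:
        if not isinstance(item, dict) or "answer" not in item or "score" not in item:
            raise ValueError(f"malformed answer entry: {item!r}")
        # Scores may arrive as numbers or as numeric strings; int() accepts both.
        cleaned.append({"answer": str(item["answer"]), "score": int(item["score"])})

    if sum(entry["score"] for entry in cleaned) > 100:
        raise ValueError("scores sum to more than 100")
    return {"question": question, "answers": cleaned}
```

A caller can catch `ValueError` (which `json.JSONDecodeError` subclasses) and simply ask the model for another round.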

Even with an explicit description of the output structure, though, prompting alone is unlikely to give you output that is syntactically valid JSON. Here’s a sample of the output from the prompt above:

Here is a question with a list of answers and scores:

{

"question": "Name something you might find in a purse or wallet",

"answers": [

{ "answer": "Money", score: 70 },

{ "answer": "Phone", score: 20 },

{ "answer": "Credit Card", score: 5 },

{ "answer": "Candy", score: 2 },

{ "answer": "Makeup", score: 3 }

]

}

Pretty good, right? It came up with a question, in the format requested (and needed for the game). It also output the `score` values as numbers even though I asked, in the prompt, for them to be strings! The model overrode my instructions, but for the better. However, despite the request to give nothing but the output, there is still some preamble, which requires its own intelligence to parse. How do we get rid of this?
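To see what this means for the code consuming the output, here is a small sketch that feeds a shortened copy of the sample, as printed above, to Python’s `json` module. The helper name `try_parse` is made up for this example.

```python
import json

# A shortened copy of the sample output, including its preamble.
raw = '''Here is a question with a list of answers and scores:

{
"question": "Name something you might find in a purse or wallet",
"answers": [
{ "answer": "Money", score: 70 },
{ "answer": "Phone", score: 20 }
]
}'''


def try_parse(text):
    """Return the parsed object, or None if the text is not valid JSON."""
    try:
        return json.loads(text)
    except json.JSONDecodeError:
        return None


# The preamble alone is enough to make parsing fail.
whole = try_parse(raw)

# Naive repair: keep only what lies between the first "{" and the last "}".
trimmed = raw[raw.find("{"): raw.rfind("}") + 1]
braces_only = try_parse(trimmed)

print(whole, braces_only)
```

Both attempts fail: the first because of the preamble, the second because the `score` keys in the sample are not quoted, which strict JSON requires.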

The other half of the equation is an inference technique known as “guided decoding” in its most general form, and as JSON mode in this specific case. Here we guarantee the output is syntactically valid JSON by manipulating token probabilities during sampling to prevent syntactically invalid JSON from appearing (e.g. the string {‘‘} is not valid JSON).
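To make “manipulating token probabilities” concrete, here is a toy sketch of the idea. Everything in it is invented for illustration: the six-token vocabulary, the `GRAMMAR` table (a stand-in for a real JSON grammar) and `fake_logits` (a stand-in for a model). Real implementations work over the model’s actual tokenizer and a full grammar.

```python
import json
import math

# Toy vocabulary; a real model has tens of thousands of tokens.
VOCAB = ["Here", "{", '"a"', ":", "1", "}"]

# Hypothetical stand-in for a JSON grammar: for each state, the tokens that
# keep the output valid, and the state each of them leads to.
GRAMMAR = {
    "start": {"{": "key"},
    "key": {'"a"': "colon"},
    "colon": {":": "value"},
    "value": {"1": "close"},
    "close": {"}": "done"},
}


def fake_logits():
    """Stand-in for a model that always prefers the chatty token "Here"."""
    return [5.0, 1.0, 1.0, 1.0, 1.0, 1.0]


def guided_decode():
    state, output = "start", []
    while state != "done":
        allowed = GRAMMAR[state]
        # Mask: tokens the grammar forbids get a logit of -inf, i.e. zero probability.
        masked = [
            logit if token in allowed else -math.inf
            for token, logit in zip(VOCAB, fake_logits())
        ]
        # Greedy pick among the tokens that survive the mask.
        token = VOCAB[masked.index(max(masked))]
        output.append(token)
        state = allowed[token]
    return "".join(output)


result = guided_decode()
print(result)
print(json.loads(result))
```

Left unconstrained, this “model” would emit `Here` forever; with the mask in place, only grammar-approved tokens can ever be picked, so the result always parses.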

Of course, these two parts are in tension with one another: if you don’t ask for JSON in your prompt (or don’t specify the structure clearly enough) and turn on JSON mode, it’s possible that your output bears little relationship to your input. In an early version of the prompt above, I got a number of questions.

How you request JSON mode varies by API provider (this is a specific type of guided decoding, which is a big topic), but many (most?) providers accept the same request format as OpenAI, which is to include

"response_format": { "type": "json_object" }

in the body of the inference POST request. Once this is included, my output is exactly what I hoped for: the JSON and only the JSON.

{

"question": "Name something you might find in a garage",

"answers": [

{ "answer": "Tools", "score": 40 },

{ "answer": "Bike", "score": 20 },

{ "answer": "Work Bench", "score": 12 },

{ "answer": "Vacuum", "score": 8 },

{ "answer": "Lawn Mower", "score": 5 },

{ "answer": "Storage Containers", "score": 5 }

]

}

Note that generation takes longer when JSON mode is enabled. This is a consequence of how guided decoding works, which is worth a blog post in its own right.
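Putting the two halves together, a request with JSON mode switched on might look like the sketch below. It uses only the standard library and assumes an OpenAI-compatible chat completions endpoint under the Featherless base URL used later in this post; OpenAI’s name for the field is `response_format`. The function names, the user message and the `max_tokens` value are assumptions for illustration.

```python
import json
import urllib.request

# Assumed endpoint: the OpenAI-style chat completions path under the base URL.
API_URL = "https://api.featherless.ai/v1/chat/completions"


def build_request_body(prompt, model="meta-llama/Meta-Llama-3-8B-Instruct"):
    """Build a chat completions body with JSON mode switched on."""
    return {
        "model": model,
        "messages": [
            {"role": "system", "content": prompt},
            # Hypothetical user turn to kick off generation.
            {"role": "user", "content": "Give me one question."},
        ],
        "response_format": {"type": "json_object"},
        "max_tokens": 500,  # assumed limit, adjust to taste
    }


def generate_round(prompt, api_key):
    """POST the request and return the parsed question/answers object."""
    request = urllib.request.Request(
        API_URL,
        data=json.dumps(build_request_body(prompt)).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        payload = json.load(response)
    # OpenAI-style responses carry the generated text in this field.
    return json.loads(payload["choices"][0]["message"]["content"])
```

Calling `generate_round(prompt, os.environ["FEATHERLESS_API_KEY"])` with the producer prompt from earlier would then return a dictionary with `question` and `answers` keys, provided the model followed the structure described in the prompt.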

We haven’t talked about the Featherless API we’ve been using for inference, but hold on to that thought - more on that in a bit.

Part 2 - Fuzzy Answer Matching

The other challenge is matching players’ answers to the list of official answers, as they don’t always match exactly. In our running example from the video above, for the question “Name something that might be wobbly”, “A stool” was accepted in place of “Furniture” and “A baby” was accepted as a version of “Person / a drunk”.

It’s relatively easy to devise a prompt and post-processing that capture the gist of the idea. Here’s a short Python program we used during testing; it lets you iterate on prompt and model choice by judging an answer you supply on the command line.

```python
from openai import OpenAI
import os
import click

# The OpenAI client is pointed at the Featherless API via base_url.
client = OpenAI(
    base_url="https://api.featherless.ai/v1",
    api_key=os.environ.get('FEATHERLESS_API_KEY')
)


@click.command()
@click.argument('answer')
@click.option('--model', default='meta-llama/Meta-Llama-3-8B-Instruct')
def quiz(answer, model):
    question = "Name something that might be wobbly"
    official_answers = [
        "Furniture",
        "Person/A Drunk",
        "Spinning toy/top",
        "Shopping Cart",
    ]

    # Built outside the f-string: a backslash inside an f-string expression
    # is a syntax error before Python 3.12.
    answer_list = '\n'.join(f"* {a}" for a in official_answers)

    system_prompt = f"""You are a judge on the show Family Feud.
You're going to receive a guess from a contestant. That guess is a guess at one of the answers to the question
Question is "{question}"
The official answers are
{answer_list}
If the candidate's guess is a version of an official answer, please respond the wording of the official answer.
If the candidate's guess is not a version of any official answer, respond with "Survey says ... AERR!!"
"""

    # Echo the model and prompt so each run records what was tested.
    print(model)
    print(system_prompt)

    chat_completions_response = client.chat.completions.create(
        model=model,
        messages=[
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": answer}
        ],
        max_tokens=250,
    )

    print(chat_completions_response.choices[0].message.content)


if __name__ == "__main__":
    quiz()
```

But catching the comedic subtlety of the game is something else entirely. Despite many prompt iterations and many different models I tried (including Llama 3.1 405B!), I couldn’t get the judge to accept “A baby” as a variation of the answer “Person / A drunk”. If you have a different result, I’d love to hear it in the comments!
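As for the post-processing side, the judge’s reply still has to be mapped back onto one of the official answers, since models tend to wrap the answer in extra words or vary the punctuation. Here is a minimal sketch of one way to do that; the function names and the substring-matching strategy are assumptions, not the code running in the game.

```python
import string

# Map every punctuation character to a space so "Person/A Drunk" and
# "Person / a drunk." normalize to the same text.
_PUNCTUATION_TO_SPACE = str.maketrans(string.punctuation, " " * len(string.punctuation))


def normalize(text):
    """Lowercase, replace punctuation with spaces, and collapse whitespace."""
    return " ".join(text.lower().translate(_PUNCTUATION_TO_SPACE).split())


def match_official(reply, official_answers):
    """Return the official answer named in the judge's reply, or None."""
    cleaned = normalize(reply)
    for official in official_answers:
        if normalize(official) in cleaned:
            return official
    # Covers the "Survey says ... AERR!!" reply and anything unrecognised.
    return None


official_answers = ["Furniture", "Person/A Drunk", "Spinning toy/top", "Shopping Cart"]
print(match_official("Person / a drunk.", official_answers))
print(match_official("Survey says ... AERR!!", official_answers))
```

Substring matching is deliberately crude: a reply such as “that is not Furniture” would still match, so a stricter prompt or an exact comparison may be a better fit in practice.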

Choosing an inference provider

There is a growing list of inference providers to choose from. Foundation model companies provide API access to their own models (OpenAI, Anthropic, etc.); there are also companies focussed just on inference provision (friendli.ai, replicate.ai, together.ai), and aggregators (e.g. openrouter.ai).

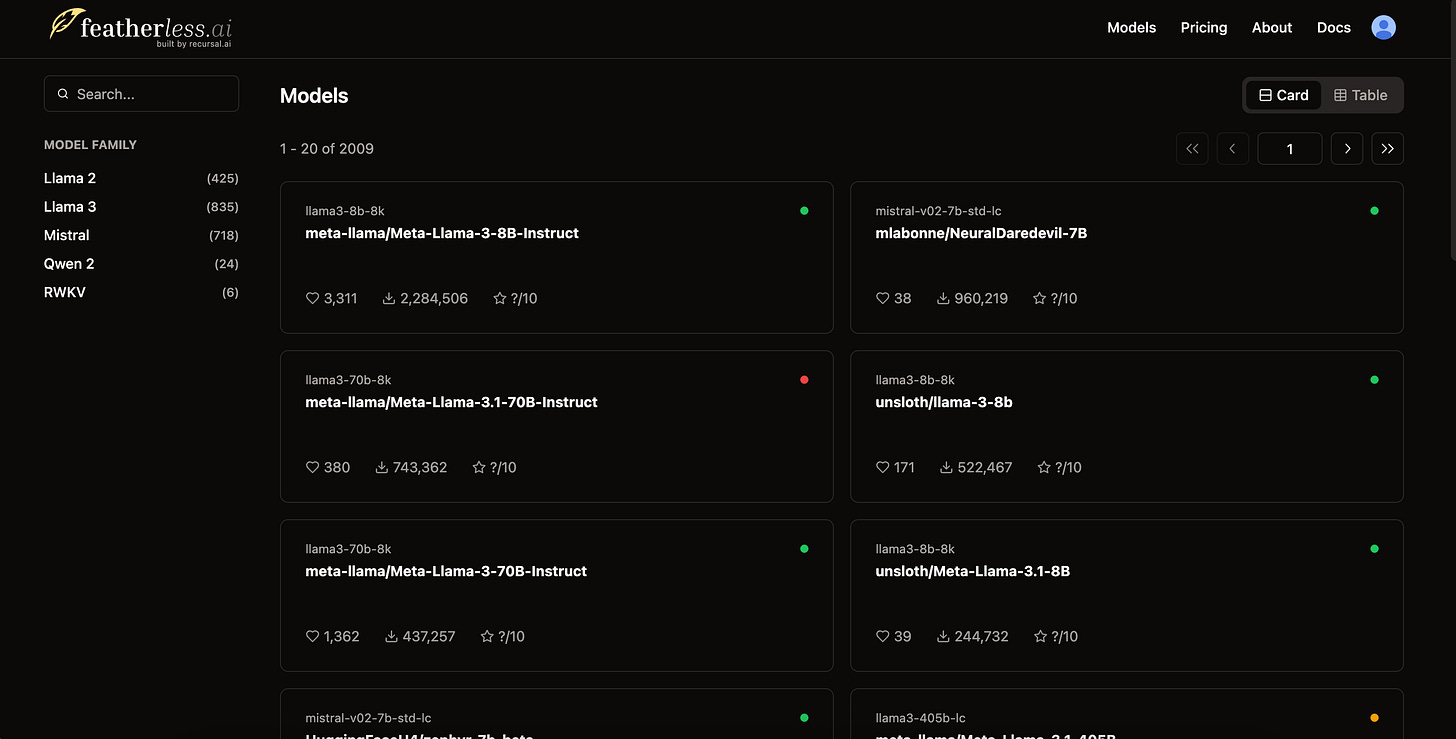

What’s unique about featherless.ai is the number of models available serverlessly, i.e. without paying for dedicated GPUs. With the exception of Featherless, serverless providers only make the most popular models available, since, behind the scenes, there is infrastructure dedicated to those models and its cost is simply amortized across a large enough group of consumers. Featherless is different. Every fine-tune of a large class of models (i.e. all fine-tunes of a specific set of base architectures) is available serverlessly. Our goal is to make every public model on HuggingFace available for serverless inference, and we currently have over 2k models available.

The next closest service in model count is openrouter.ai, which aggregates providers, and has less than 10% of the available models.

Model Selection and Testing

Play testing is an important part of any game development, and as we were playing with Featherless Feud, we tried a number of different models. The primary issues were a lack of diverse answers or answers that were too ambiguous for the game format. We’ve left the utility box that overrides the model in the lower right-hand corner of the game; this showcases one of the strengths of the Featherless platform: take any of our 2k models from our models page, drop it into that selector, and the game is instantly updated. Given that download times alone run to tens of minutes even for small models, you might appreciate this feat.

We tested a range of model architectures, sizes, and data-sets, including

failspy/Meta-Llama-3-8B-Instruct-abliterated-v3 - we chose this model to explore whether the abliteration approach would increase the variety of questions in an interesting way (inconclusive)

qwen/qwen1.5-32b - The Qwen models feel very different than the Llama models - maybe that’s the culture of Alibaba versus Meta coming through …

meta-llama/Meta-Llama-3.1-70B-Instruct: While this 70B model offered superior judgment in evaluating user answers, its slower response time made it less suitable for rapid iteration during gameplay. However, it was useful in scenarios where answer accuracy was critical.

alpindale/magnum-72b-v1: Initially, this 72B model seemed promising due to its creative output. However, its tendency for erratic responses made it unsuitable for the structured requirements of Featherless Feud.

Sao10K/L3-8B-Stheno-v3.2: This 8B model was selected for its optimal balance between speed and output quality. It consistently produced valid JSON responses, making it a reliable choice for generating game content efficiently.

anthracite-org/magnum-32b-v2 - a qwen2-32b fine-tune intended for role-play

At a high level, the more RP-focussed models tended to generate a greater variety of questions, and the bigger models didn’t generate content with a sufficient jump in quality to justify the longer running time. So we settled on Stheno, as it balanced variety of output with the fastest inference time.
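Our comparison came from play testing rather than a benchmark, but the same two axes (variety and running time) can be roughly quantified. The sketch below is hypothetical: `compare_models` is a made-up helper, and `generate` is any function you supply that takes a model name and returns a parsed round with a `question` key.

```python
import time


def compare_models(models, generate, rounds=5):
    """Run `generate(model)` several times per model and summarise the results."""
    report = {}
    for model in models:
        questions = []
        start = time.perf_counter()
        for _ in range(rounds):
            questions.append(generate(model)["question"])
        elapsed = time.perf_counter() - start
        report[model] = {
            # Crude variety measure: distinct questions, ignoring case and padding.
            "distinct_questions": len({q.strip().lower() for q in questions}),
            "seconds_per_round": elapsed / rounds,
        }
    return report
```

Counting distinct questions is only a crude proxy for variety, since it says nothing about whether the questions are any good; that part still needs a human at the buzzer.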

Try running the game with some of the models and let us know in the comments what differences you notice!

Wrapping up

We learned a ton in this journey, and hope you did too. Perhaps most surprising is that capturing the comedic subtlety of Family Feud escaped us even when using the more capable (i.e. larger) open-source LLMs.

Thanks for reading! Let us know how you enjoyed this article, either in the comments, or with an email to hello@featherless.ai, and head on over to featherless.ai to experiment with the models listed here and more.

And again, check out the running game here